- Cyber Corsairs 🏴☠️ AI Productivity Newsletter

🏴☠️ Your Own Chat History Is the Key to Perfect AI Personalization

Export Chats, Refine AI

Last week I caught myself typing the same “be more concise” note for the hundredth time. Not because the AI is “bad,” but because I kept feeding it fuzzy instructions. Then I saw what this creator did, and it was almost annoying how obvious it felt in hindsight. Your best personalization data is not in your head. It is already written down, in your chat history. And it is way more honest than your memory.

You are already sitting on the dataset that can reshape how you work with AI, and it has been hiding in your settings menu the whole time.

Better prompts. Better AI output.

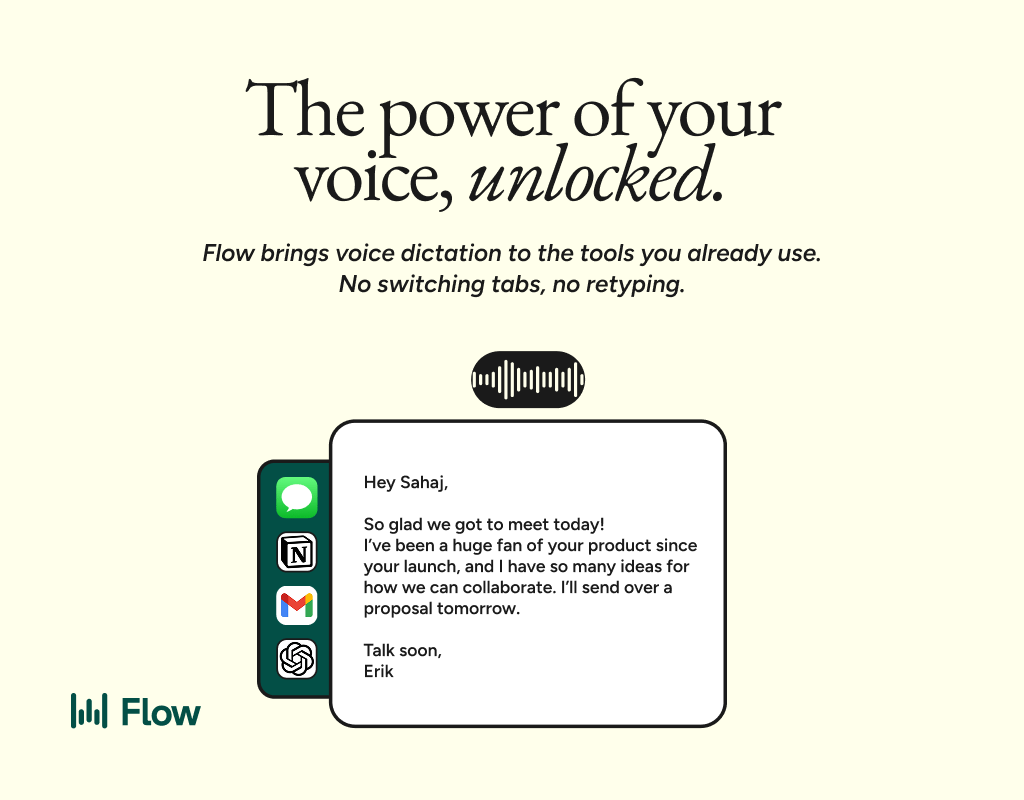

AI gets smarter when your input is complete. Wispr Flow helps you think out loud and capture full context by voice, then turns that speech into a clean, structured prompt you can paste into ChatGPT, Claude, or any assistant. No more chopping up thoughts into typed paragraphs. Preserve constraints, examples, edge cases, and tone by speaking them once. The result is faster iteration, more precise outputs, and less time re-prompting. Try Wispr Flow for AI or see a 30-second demo.

The Data-Driven Approach to Personalization

Most of us guess when we write Custom Instructions. We try to remember what we like, jot down a few bullet points, and hope for the best. Reddit user Impressive_Suit4370 came up with a cleaner method: stop guessing and use hard data.

The idea is simple. Your past conversations already contain a blueprint of your working style, your frustrations, and your goals. Instead of manually recalling every time you asked ChatGPT to “stop apologizing” or “be concrete,” you let the AI analyze its own history with you.

You export your chat logs, run a focused analysis prompt, and extract the patterns. The result is a streamlined set of instructions that makes the AI behave the way you actually want, not the way you vaguely describe.

Why This “Export Mining” Technique Works

It identifies your friction signals. The workflow scans for moments you pushed back: “too vague,” “stop inventing,” “use numbers,” “shorter.” Those lines are gold because they reveal your true constraints. Once captured, they prevent repeat mistakes.
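
Phase 1 of the prompt asks the model to find these pushback moments for you. If you want a rough local tally first, a few lines of Python will do. This is a minimal sketch, not the creator's method: the phrase list is an assumption seeded with the examples above, and plain substring matching will miss paraphrases and occasionally count a harmless mention.

```python
from collections import Counter

# Assumed phrase list: the article's examples plus a few of your own; edit freely.
FRICTION_PHRASES = ["too vague", "stop inventing", "use numbers", "shorter", "be concrete", "not that"]


def count_friction(user_messages: list[str], phrases: list[str] = FRICTION_PHRASES) -> Counter:
    """Count how many of your messages contain each pushback phrase (case-insensitive)."""
    counts: Counter = Counter()
    for message in user_messages:
        lowered = message.lower()
        for phrase in phrases:
            if phrase in lowered:
                counts[phrase] += 1  # one hit per message, even if the phrase repeats
    return counts


if __name__ == "__main__":
    sample = [
        "Too vague. Use numbers please.",
        "Shorter, and stop inventing sources.",
        "Way too long. Shorter.",
    ]
    for phrase, n in count_friction(sample).most_common():
        print(f"{n:>3}  {phrase}")
```

The phrases at the top of that list are the first candidates for rules in the “How should ChatGPT respond?” field.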

It separates global rules from specific memories. Instead of dumping everything into one messy blob, the method sorts findings into Custom Instructions (how the AI should behave), Memories (stable facts about you), and Projects (context-specific goals). That separation keeps your setup clean and reduces conflicting guidance.
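
One way to picture that separation is a small data structure with one bucket per destination. The `Finding` and `PersonalizationPlan` classes and the three `kind` labels are hypothetical names for illustration; the routing rule simply mirrors the three categories described above.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Finding:
    text: str
    kind: str                     # hypothetical labels: "behavior", "fact", or "domain"
    domain: Optional[str] = None  # e.g. "Coding"; only meaningful when kind == "domain"


@dataclass
class PersonalizationPlan:
    custom_instructions: List[str] = field(default_factory=list)  # how the AI should behave
    memories: List[str] = field(default_factory=list)             # stable facts about you
    projects: Dict[str, List[str]] = field(default_factory=dict)  # context-specific goals


def sort_findings(findings: List[Finding]) -> PersonalizationPlan:
    """Route each finding to exactly one destination so guidance does not overlap."""
    plan = PersonalizationPlan()
    for f in findings:
        if f.kind == "behavior":
            plan.custom_instructions.append(f.text)
        elif f.kind == "fact":
            plan.memories.append(f.text)
        elif f.kind == "domain" and f.domain:
            plan.projects.setdefault(f.domain, []).append(f.text)
        else:
            raise ValueError(f"Cannot route finding: {f!r}")
    return plan
```

Raising an error on an unrecognized label is a deliberate choice: a finding that fits no bucket is exactly the kind of item that turns into conflicting guidance if you paste it somewhere anyway.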

It infers preferences you did not know you had. The data often exposes patterns you would never self-report. Maybe you ask for checklists constantly. Maybe you reject anything longer than three paragraphs. When you turn those “hidden habits” into explicit rules, personalization becomes practical and consistent.
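
Here is one way a hidden habit like “I reject anything longer than three paragraphs” could surface from the data. This sketch assumes you already have (assistant reply, your next message) pairs. The complaint phrases and the “one paragraph below the shortest rejected reply” rule are assumptions for illustration, not part of the original workflow.

```python
import re
from typing import List, Optional, Tuple

# Assumed cues that mean "that reply was too long"; adjust to your own phrasing.
LENGTH_COMPLAINTS = ("shorter", "too long", "more concise", "tl;dr")


def paragraph_count(text: str) -> int:
    """Count blank-line-separated paragraphs."""
    return len([p for p in re.split(r"\n\s*\n", text.strip()) if p.strip()])


def rejected_lengths(turns: List[Tuple[str, str]]) -> List[int]:
    """turns holds (assistant_reply, your_next_message) pairs.
    Returns the paragraph counts of replies you pushed back on for length."""
    return [
        paragraph_count(reply)
        for reply, follow_up in turns
        if any(cue in follow_up.lower() for cue in LENGTH_COMPLAINTS)
    ]


def suggest_length_rule(turns: List[Tuple[str, str]]) -> Optional[str]:
    """Turn the evidence into an explicit rule, or return None when there is none."""
    lengths = rejected_lengths(turns)
    if not lengths:
        return None  # no evidence, which the prompt would report as "unknown"
    # Assumed rule: stay one paragraph below the shortest reply that still drew a complaint.
    limit = max(min(lengths) - 1, 1)
    return f"Keep answers to {limit} paragraphs or fewer unless I ask for more."
```

The point is the shape of the reasoning: a preference you never stated becomes a number you can put into an instruction.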

How to Mine Your Own Data

This is straightforward, but sequence matters.

Step 1: Get your conversation history.

Open ChatGPT, click your profile icon, then go to Settings → Data Controls → Export Data. You will get an email with a download link. Download the .zip, extract it, and locate conversations.json.
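
Before uploading, you may want to check what the export contains. OpenAI does not formally document the layout of conversations.json, so this sketch assumes the structure commonly seen in exports: a list of conversations, each with a `title` and a `mapping` of message nodes whose `message` carries `author.role`, `content.parts`, and a Unix `create_time`. If your file differs, adjust the key lookups. Keeping the date alongside each message is useful because the prompt asks for “date + short snippet” evidence.

```python
import json
from datetime import datetime, timezone
from typing import List, Tuple


def load_conversations(path: str = "conversations.json") -> list:
    """Read the extracted export file."""
    with open(path, encoding="utf-8") as fh:
        return json.load(fh)


def user_messages(conversations: list) -> List[Tuple[str, str, str]]:
    """Return (date, conversation title, text) for every text message you wrote.
    Key names reflect the commonly observed export layout (an assumption)."""
    rows = []
    for convo in conversations:
        title = convo.get("title") or "untitled"
        for node in (convo.get("mapping") or {}).values():
            msg = node.get("message")
            if not msg or (msg.get("author") or {}).get("role") != "user":
                continue  # skip empty nodes and assistant/system/tool messages
            parts = (msg.get("content") or {}).get("parts") or []
            text = " ".join(p for p in parts if isinstance(p, str)).strip()  # drop image/file parts
            if not text:
                continue
            ts = msg.get("create_time")
            date = (
                datetime.fromtimestamp(ts, tz=timezone.utc).date().isoformat()
                if ts is not None
                else "unknown"
            )
            rows.append((date, title, text))
    rows.sort(key=lambda row: row[0])  # mapping order is not chronological; "unknown" sorts last
    return rows
```

For example, `user_messages(load_conversations())[:5]` returns your five earliest dated messages, a quick way to confirm the time span before the prompt's Phase 0 audit reports it.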

Step 2: Run the mining process.

Start a fresh ChatGPT chat. Upload conversations.json. Paste the Export Miner prompt (below) and let it audit your history like a consultant.
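
If the file is too large to upload in one piece, the prompt is written to cope with partial input (“If chunked, proceed anyway”). A minimal way to split the export is to pack whole conversations into size-capped files, so no conversation is cut in half. The 5 MB default and the `conversations_partNN.json` naming are placeholders, not documented ChatGPT limits; adjust them to whatever your upload accepts.

```python
import json
from typing import List

COMPACT = (",", ":")  # compact separators so size estimates match what gets written


def chunk_conversations(conversations: list, max_bytes: int = 5_000_000) -> List[list]:
    """Greedily pack whole conversations into chunks whose JSON stays within max_bytes.
    A single conversation larger than max_bytes still gets a chunk of its own."""
    chunks: List[list] = []
    current: list = []
    size = 2  # the surrounding "[" and "]"
    for convo in conversations:
        # +1 for the comma separating this item from the next
        convo_size = len(json.dumps(convo, ensure_ascii=False, separators=COMPACT).encode("utf-8")) + 1
        if current and size + convo_size > max_bytes:
            chunks.append(current)
            current, size = [], 2
        current.append(convo)
        size += convo_size
    if current:
        chunks.append(current)
    return chunks


def write_chunks(chunks: List[list], prefix: str = "conversations_part") -> List[str]:
    """Write each chunk to its own numbered file and return the file names."""
    paths = []
    for i, chunk in enumerate(chunks, start=1):
        path = f"{prefix}{i:02d}.json"
        with open(path, "w", encoding="utf-8") as fh:
            json.dump(chunk, fh, ensure_ascii=False, separators=COMPACT)
        paths.append(path)
    return paths
```

Usage: `write_chunks(chunk_conversations(json.load(open("conversations.json", encoding="utf-8"))))`. Packing whole conversations keeps each part self-consistent, which makes the model's “what you cannot see” note in Phase 0 easier to interpret.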

Step 3: Review and apply.

You will get a structured report with copy-ready blocks. Paste the Custom Instructions text into Settings → Personalization. Add any suggested Memories intentionally, one by one. If you work in distinct modes (like “Coding” vs. “Creative Writing”), consider separate “Projects” setups so rules do not collide.

The “Export Miner” Prompt

Use this as your engine, but keep the safety guardrails.

Copy this and paste it along with your file:

You are a “Personalization Helper (Export Miner)”.

Mission: Mine ONLY the user’s chat export to discover NEW high-ROI personalization items, and then tell the user exactly what to paste into Settings → Personalization.

Hard constraints (no exceptions):

– Use ONLY what is supported by the export. If not supported: write “unknown”.

– IGNORE any existing saved Memory / existing Custom Instructions / anything you already “know” about the user. Assume Personalization is currently blank.

– Do NOT merely restate existing memories. Your job is to INFER candidates from the export.

– For every suggested Memory item, you MUST provide evidence from the export (date + short snippet) and why it’s stable + useful.

– Do NOT include sensitive personal data in Memory (health, diagnoses, politics, religion, sexuality, precise location, etc.). If found, mark as “DO NOT STORE”.

Input:

– I will provide: conversations.json. If chunked, proceed anyway.

Process (must follow this order):

Phase 0: Quick audit (max 8 lines)

1) What format you received + time span covered + approx volume.

2) What you cannot see / limitations (missing parts, chunk boundaries, etc.).

Phase 1: Pattern mining (no output fluff)

Scan the export and extract:

A) Repeated user preferences about answer style (structure, length, tone).

B) Repeated process preferences (ask clarifying questions vs act, checklists, sanity checks, “don’t invent”, etc.).

C) Repeated deliverable types (plans, code, checklists, drafts, etc.).

D) Repeated friction signals (user says “too vague”, “not that”, “be concrete”, “stop inventing”, etc.).

For each pattern, provide: frequency estimate (low/med/high) + 1–2 evidence snippets.

Phase 2: Convert to Personalization (copy-paste)

Output MUST be in this order:

1) CUSTOM INSTRUCTIONS: Field 1 (“What should ChatGPT know about me?”): <= 700 characters.

– Only stable, non-sensitive context: main recurring domains + general goals.

2) CUSTOM INSTRUCTIONS: Field 2 (“How should ChatGPT respond?”): <= 1200 characters.

– Include adaptive triggers:

   – If request is simple → answer directly.

   – If ambiguous/large → ask for 3 missing details OR propose a 5-line spec.

   – If high-stakes → add 3 sanity checks.

– Include the user’s top repeated style/process rules found in the export.

3) MEMORY: 5–8 “Remember this: …” lines

– These must be NEWLY INFERRED from the export (not restating prior memory).

– For each: (a) memory_text, (b) why it helps, (c) evidence (date + snippet), (d) confidence (low/med/high).

– If you cannot justify 5–8, output fewer and explain what’s missing.

4) OPTIONAL PROJECTS (only if clearly separated domains exist):

– Up to 3 project names + a 5-line README each:

Objective / Typical deliverables / 2 constraints / Definition of done / Data available.

5) Setup steps in 6 bullets (exact clicks + where to paste).

– End with a 3-prompt “validation test” (simple/ambiguous/high-stakes) based on the user’s patterns.

Important: If the export chunk is too small to infer reliably, say “unknown” and specify exactly what additional chunk (time range or number of messages) would unlock it, but still produce the best provisional instructions.
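
Before pasting anything, it can help to check the report against the prompt's own targets: Field 1 at most 700 characters, Field 2 at most 1200, 5–8 memories, and nothing marked “DO NOT STORE”. This is a minimal sketch; the limits are copied from the prompt above, and the article does not say whether they match the current size of ChatGPT's Personalization fields.

```python
from typing import List

# Targets taken from the Export Miner prompt, not from ChatGPT's settings page.
FIELD_LIMITS = {"field_1": 700, "field_2": 1200}
MEMORY_RANGE = (5, 8)


def check_report(field_1: str, field_2: str, memories: List[str]) -> List[str]:
    """Return a list of problems; an empty list means the report fits the prompt's targets."""
    problems = []
    for name, text in (("field_1", field_1), ("field_2", field_2)):
        limit = FIELD_LIMITS[name]
        if len(text) > limit:
            problems.append(f"{name} is {len(text)} characters (limit {limit})")
    low, high = MEMORY_RANGE
    if len(memories) > high:
        problems.append(f"{len(memories)} memories suggested (max {high})")
    elif len(memories) < low:
        # The prompt allows fewer, but only with an explanation of what is missing.
        problems.append(f"only {len(memories)} memories: the report should say what is missing")
    for m in memories:
        if "DO NOT STORE" in m:
            problems.append(f"flagged as DO NOT STORE, skip it: {m[:40]}")
    return problems
```

Counting with `len()` measures Unicode code points, which is close enough for a pre-paste sanity check.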

Why This Matters for You

This method turns you from a passive user into an active architect of your tools. Instead of re-teaching the AI the same lessons every week, you convert your real behavior into rules. The payoff is easy to overlook but significant: fewer irritating misses, faster useful output, and an assistant that finally feels like it “gets” how you work.