- Cyber Corsairs 🏴☠️ AI Productivity Newsletter

🏴☠️ Stop Prompting Like It’s GPT-4

Less Prompting, More Restraint

You are likely prompting the newest AI models completely wrong because you are stuck in 2023 habits.

We spent years learning how to coax intelligence out of chatbots, but the game has shifted with the latest iterations. In a LinkedIn post, an AI professional has been stress-testing what he calls “GPT-5.2,” and his take flips standard prompt engineering on its head. The lesson: our job is less about pushing models harder and more about holding them back.

That is a massive mindset shift for anyone working with Generative AI.

The Shift from Activation to Restraint

Newer, high-reasoning models can be too helpful. In the GPT-3.5 and early GPT-4 days, you had to be prescriptive, breaking tasks down and asking for step-by-step thinking, to avoid shallow or hallucinated output. Now the dynamic can invert: the models behave like over-eager, genius interns, adding explanations, alternatives, and stylistic flourishes you didn’t ask for.

The author argues the new “prompt engineering” is constraint, not activation. If you don’t set hard boundaries, capability becomes friction instead of leverage. You’re steering a Ferrari already at speed, not trying to start an engine.

Managing the “Over-Helpful” AI

His approach centers on precision, role clarity, and deliberately managing “thinking” features. The goal is to get exactly what you need, not a helpful essay.

Explicitly Define What NOT To Do

Negative constraints matter more than ever. Smarter models assume intent and fill gaps with extra fluff that can ruin user experience or utility. Use blunt commands like “No extras,” “No embellishments,” or “Code only,” and trade “thoroughness” for “exactness.”

Outcome Over Process

Long play-by-play instructions can be counterproductive for high-reasoning models. They already infer the logical steps, and forcing a rigid process can block better reasoning paths. Define the success state instead: audience, deliverable, and what “done” means.

For example, rather than dictating an email line by line, specify: “Success is convincing [Audience] to sign up for [Webinar].” Let the model choose the best route to that target.
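
If you assemble prompts in code, the outcome-first idea can be sketched as a small builder. This is a minimal illustration, not the author's method: `OutcomeSpec` and `outcome_prompt` are hypothetical names, and the three fields simply mirror the audience, deliverable, and "done" criteria described above. The prompt contains no step list; the route is left to the model.

```python
from dataclasses import dataclass


@dataclass
class OutcomeSpec:
    """Hypothetical container for an outcome-first prompt."""
    audience: str
    deliverable: str
    success: str  # what "done" means, phrased as an observable result


def outcome_prompt(spec: OutcomeSpec) -> str:
    # Describe the target, not the procedure: no numbered steps are included,
    # so the model is free to choose its own route to the success state.
    return (
        f"Deliverable: {spec.deliverable}\n"
        f"Audience: {spec.audience}\n"
        f"Success is {spec.success}."
    )


print(outcome_prompt(OutcomeSpec(
    audience="busy marketing managers",
    deliverable="one promotional email",
    success="convincing busy marketing managers to sign up for our webinar",
)))
```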

Precision in Length and Logic

Vague adjectives like “short” or “detailed” are risky. Replace them with hard caps such as “Max 3 sentences” or “5 bullet points exactly” to force information density. Also, toggle “Thinking Mode” or “Extended Thinking” only when the task warrants it, and add a default like: “If unclear, choose the simpler option.”
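
Integer caps have a practical upside: unlike "short," they can be checked mechanically. The sketch below is one hypothetical way to do that; the function names are illustrative, and the sentence counter is a crude punctuation heuristic (abbreviations such as "e.g." will throw it off), so treat it as a sanity check rather than a parser.

```python
import re
from typing import List, Optional


def count_sentences(text: str) -> int:
    # Crude heuristic: count ".", "!" or "?" followed by whitespace or end of text.
    return len(re.findall(r"[.!?](?=\s|$)", text.strip()))


def count_bullets(text: str) -> int:
    # Count lines that start (after optional indentation) with "-", "*" or "•".
    return sum(1 for line in text.splitlines() if re.match(r"\s*[-*•]\s+", line))


def check_caps(text: str, max_sentences: Optional[int] = None,
               exact_bullets: Optional[int] = None) -> List[str]:
    """Return a list of cap violations; an empty list means the output complies."""
    problems = []
    if max_sentences is not None:
        n = count_sentences(text)
        if n > max_sentences:
            problems.append(f"{n} sentences (max {max_sentences})")
    if exact_bullets is not None:
        n = count_bullets(text)
        if n != exact_bullets:
            problems.append(f"{n} bullets (expected exactly {exact_bullets})")
    return problems


print(check_caps("One. Two. Three. Four.", max_sentences=3))
```

On a violation, one option is to resend the same hard cap instead of relaxing it.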

The Nuance of Adaptation

The hardest part is unlearning the muscle memory of 2023-style prompting: long preambles and heavy micromanagement. You need to trust the model’s reasoning while aggressively gatekeeping format and verbosity. Striking that balance is the new skill.

Credits: Ruben Hassid

Captain YAR’s Curated Toolkit

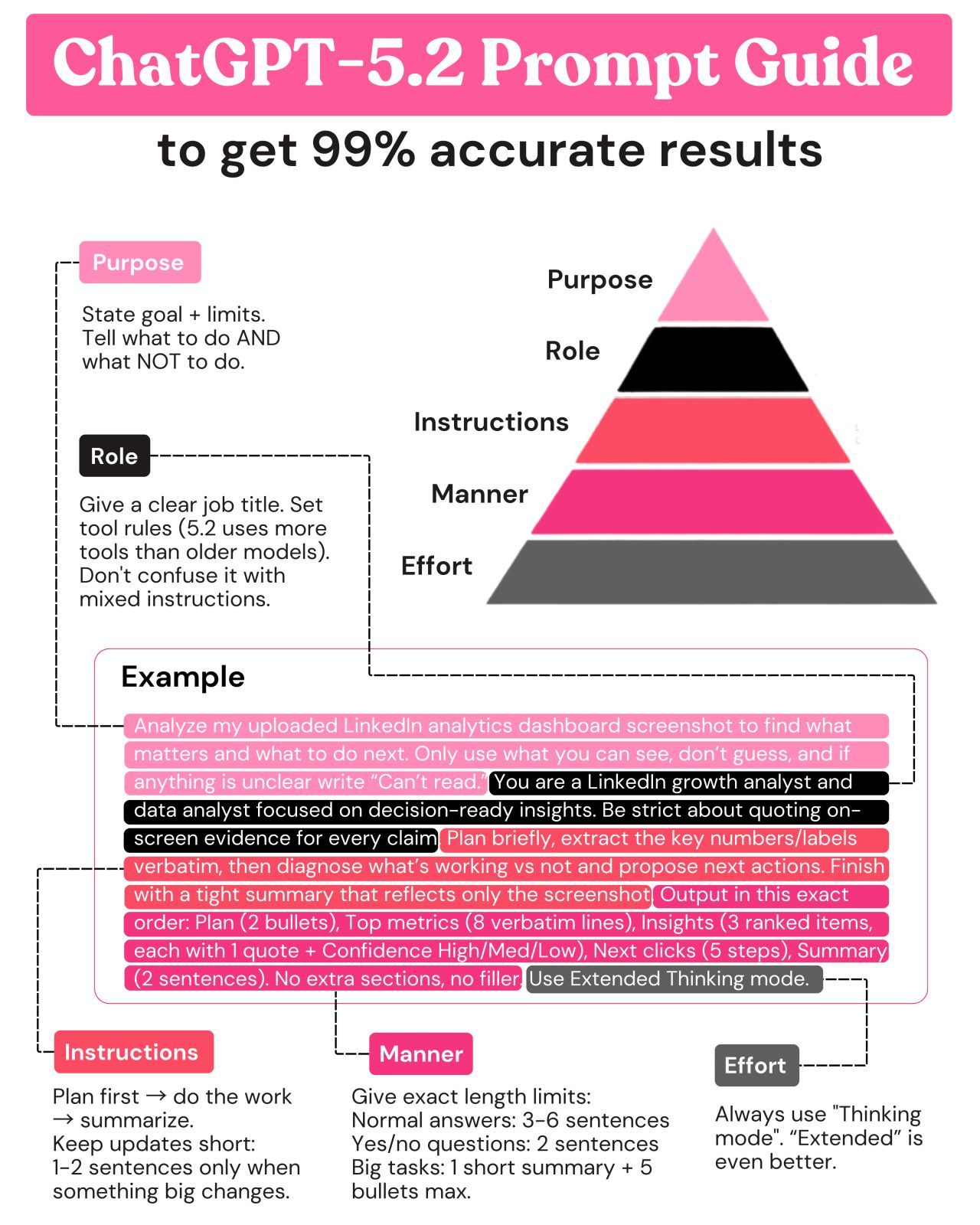

Based on the creator’s rules, here is a prompt structure for next-gen models.

The “New Era” System Prompt

Role: You are [Expert Role]. If instructions are mixed or unclear, default to the simpler choice.

Constraint: Do exactly what I ask. No extras. No embellishments. Do not add conversational filler.

Output: Adhere to strict length limits. If I say “short,” I mean 3–5 sentences.

Goal: Focus on goal completion. I will consider this successful when you [Target Outcome] for [Audience].
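
If you reuse this template across tasks, it can help to fill it programmatically so the constraint lines never drift. The helper below is a hypothetical sketch: its name and parameters are illustrative, and it only formats text; it does not call any model API.

```python
from typing import Tuple


def new_era_system_prompt(role: str, target_outcome: str, audience: str,
                          short_range: Tuple[int, int] = (3, 5)) -> str:
    """Fill the four-part template; all names here are hypothetical."""
    low, high = short_range
    parts = [
        # Role plus the tie-breaking default for ambiguous instructions.
        f"Role: You are {role}. If instructions are mixed or unclear, default to the simpler choice.",
        # Negative constraints stay fixed so they cannot drift between tasks.
        "Constraint: Do exactly what I ask. No extras. No embellishments. Do not add conversational filler.",
        # "Short" is pinned to an integer range instead of being left vague.
        f'Output: Adhere to strict length limits. If I say "short," I mean {low}–{high} sentences.',
        # Outcome-based success criterion.
        f"Goal: Focus on goal completion. I will consider this successful when you {target_outcome} for {audience}.",
    ]
    return "\n\n".join(parts)


print(new_era_system_prompt(
    role="a senior B2B copywriter",
    target_outcome="write an email that gets webinar sign-ups",
    audience="busy marketing managers",
))
```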

Quick Tips from the Expert

Toggle thinking: For logic puzzles or code, explicitly request “Extended Thinking” mode.

Hard caps: Never use relative size words; always use integers (e.g., “Max 2 bullets”).

Stop the chat: If the model starts explaining itself, interrupt and reiterate: “No explanation. Output only.”
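
The "Stop the chat" tip can be partly automated when you call a model from code. This sketch assumes a hand-picked list of filler phrases (the patterns are illustrative guesses, not anything the author published) and returns the author's corrective line when a response looks chatty. Sending that follow-up is left to whatever client you use.

```python
import re
from typing import Optional

# Illustrative filler patterns (assumptions); tune them to what your model actually emits.
FILLER_PATTERNS = [
    r"^\s*(sure|certainly|of course|great question)\b",
    r"\bhere(?:'s| is) (?:the|your|an?)\b",
    r"\blet me explain\b",
]

# The corrective line from the tip above.
CORRECTION = "No explanation. Output only."


def follow_up_if_chatty(response: str) -> Optional[str]:
    """Return the corrective follow-up if the response looks chatty, else None."""
    lowered = response.lower()
    for pattern in FILLER_PATTERNS:
        # MULTILINE lets "^" match at the start of any line, not just the first.
        if re.search(pattern, lowered, flags=re.MULTILINE):
            return CORRECTION
    return None


print(follow_up_if_chatty("Sure! Here is your code:\nprint(1)"))
print(follow_up_if_chatty("print(1)"))
```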

I highly recommend reading the full breakdown to see the specific examples the author tested. It is a brilliant look at where prompt engineering is heading next.