Cyber Corsairs 🏴☠️ AI Productivity Newsletter


🏴☠️ ChatGPT vs. Claude

AI Model Showdown

I watched this talented creator’s breakdown and had that rare feeling: “Oh, this is going to get messy.” Not because the tech is bad, but because the stakes are suddenly public. Two companies that normally hide behind product pages are now throwing punches in the open. The wild part is how coordinated it feels, as if each move is designed to steal oxygen from the other. If you’ve ever refreshed a launch page at exactly the wrong moment, you know the vibe. This isn’t just an AI upgrade cycle anymore; it’s a showdown.

The headline drama is marketing, but the real pressure is underneath it. One side has the mindshare, the other has the “serious builders” reputation, and both want to win over developers before the next wave of AI agents goes mainstream. The creator framed it as a David-versus-Goliath story, and even if you don’t love that analogy, the tension is real.

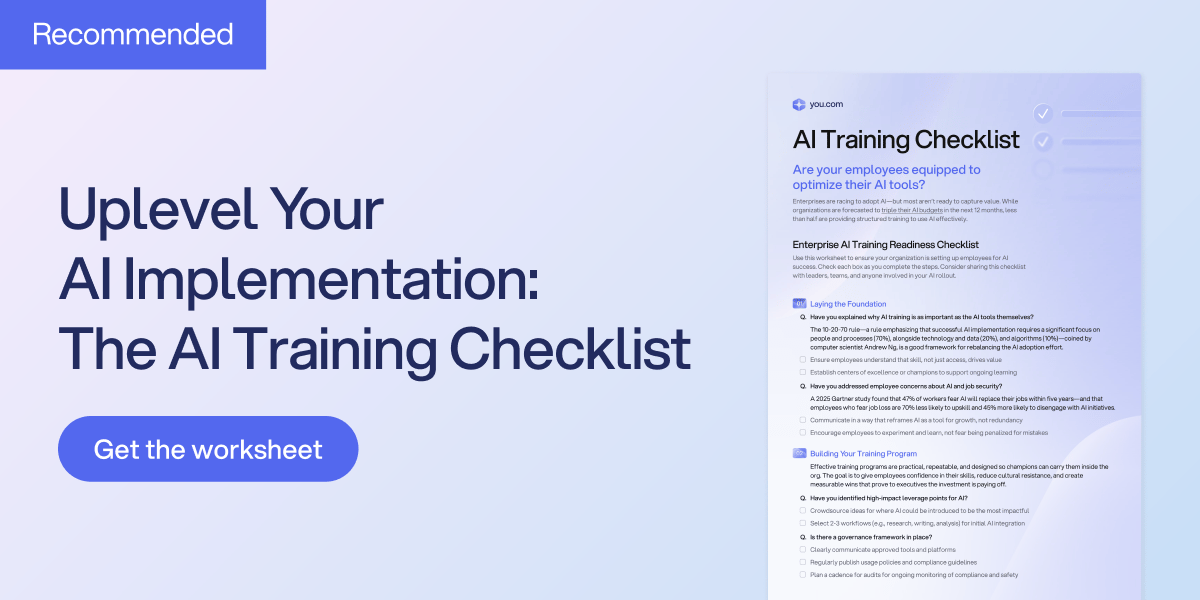


A key detail from the breakdown was the user gap. ChatGPT’s user base is measured in the hundreds of millions, while Claude is much smaller, at around 15.5 million active users. The creator also noted that other tools currently draw more usage than Claude, even though Claude gets constant praise for coding. That mismatch pours gasoline on the rivalry, because perception and adoption don’t always move together.

The Simultaneous Model Strike

Here’s where it gets interesting: both companies dropped their most powerful coding-focused models within about an hour of each other. Anthropic released Claude Opus 4.6, and 15 to 60 minutes later OpenAI answered with GPT 5.3 Codex. That timing doesn’t feel accidental; it feels like a fight for the same headline and the same developer attention. In a world where news cycles last a few hours, minutes matter.

The creator pointed out that Anthropic allegedly moved up the release time by around 15 minutes to land first. Both launches are aimed at coders and agentic workflows, meaning “do the work” systems, not just chat. That’s a quiet shift: we’re moving from fun conversations to tools that can actually ship software and chew through huge datasets.

The Super Bowl Ad Wars and the Streisand Effect

The spiciest piece was the Super Bowl campaign. Anthropic’s ads show a warm, intimate AI moment, like helping someone communicate better with their mother, and then slam the brakes with a random product placement. The message is obvious: ads would poison personal AI interactions.

The creator also added a reality check: OpenAI has said ads won’t appear inside the response text itself. But the conflict escalated when Sam Altman responded publicly and at length on X, calling the ads dishonest. And that’s where the Streisand Effect kicks in, because the defense can accidentally broadcast the attack to a much bigger audience than it would’ve reached on its own.

Claude Opus 4.6: The Context Window King

On the product side, Claude’s standout flex is scale. Opus 4.6 is described as having a 1 million token context window, which the creator translated into roughly 750,000 words of working memory. For non-technical readers, that means you can drop in a large codebase or a massive set of documentation, and the model can keep the whole thing “in mind” at once.

That matters because good engineering is about architecture, not isolated snippets. If the model can see dependencies, naming patterns, and internal logic, it can suggest fixes that don’t break everything else. The creator also mentioned strong benchmark claims and “adaptive thinking,” where the model decides how long to think depending on the problem.
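If you want to sanity-check whether your own project would fit, here’s a rough Python sketch. It uses the ratio implied by the creator’s conversion (1 million tokens ≈ 750,000 words, so about 0.75 words per token) and simply counts whitespace-separated words. That ratio is an assumption borrowed from the breakdown: real tokenizers split code and punctuation very differently, so treat the result as a ballpark, not a guarantee. The file extensions and the 50,000-token `reserve` for your prompt and the model’s reply are hypothetical, illustrative choices, not official limits.

```python
import os

# Ratio implied by the article's conversion: 1,000,000 tokens ~ 750,000 words.
# This is a heuristic assumption, not how any real tokenizer works.
WORDS_PER_TOKEN = 0.75
# Context window size as described for Opus 4.6 in the breakdown.
CONTEXT_WINDOW_TOKENS = 1_000_000


def estimate_tokens(text: str, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Rough token estimate from a whitespace word count (not a real tokenizer)."""
    words = len(text.split())
    return round(words / words_per_token)


def project_token_estimate(root: str, extensions=(".py", ".js", ".ts", ".md")) -> int:
    """Walk a project directory and sum rough token estimates for matching files."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):  # str.endswith accepts a tuple
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as f:
                    total += estimate_tokens(f.read())
    return total


def fits_in_context(token_count: int,
                    budget: int = CONTEXT_WINDOW_TOKENS,
                    reserve: int = 50_000) -> bool:
    """True if the estimate plus a hypothetical reserve for prompt/reply fits the budget."""
    return token_count + reserve <= budget


# Sanity check of the article's arithmetic: 1M tokens -> about 750,000 words.
print(int(CONTEXT_WINDOW_TOKENS * WORDS_PER_TOKEN))  # 750000
```

For example, `fits_in_context(project_token_estimate("path/to/your/project"))` gives a quick yes/no before you start uploading files, where the path is a placeholder for your own repo.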

GPT 5.3 Codex: The Self-Improving Machine

OpenAI’s counter is compelling for a different reason: how it was trained. The creator explained that the team used early versions of the model to debug and improve parts of its own training process. That’s the recursion moment people talk about, where AI starts accelerating its own development. If that loop tightens, release cycles won’t just be faster, they’ll feel relentless.

In a simple real-world test, the creator asked both models to build a landing page for a surfboard company from a single prompt. Claude finished faster and produced a clean, functional site. GPT’s version was a little more stylish, with more modern polish and nicer entrance animations. The broader point is that the gap now comes down to “taste and workflow,” not “one is obviously better.”


Power User Tip: The “Vibe Coding” Workflow

Maximize the context: if you’re using Claude Opus 4.6, don’t just paste in a single script. Upload the whole project or a big chunk of docs so it can behave like a senior dev who knows your system.

Visual refinement: if you care about front-end feel, run your design prompts through GPT 5.3 Codex and push for modern layout, spacing, and motion.

The double check: for anything important, have the two models review each other. Let one generate, and let the other audit the result for security, edge cases, and efficiency.
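Here’s what that generate-then-audit loop might look like as a minimal Python sketch. Each “model” is just a hypothetical function that takes a prompt and returns text; in practice you’d wrap whichever API client you actually use. `cross_review`, `swap_roles`, `build_audit_prompt`, and the prompt wording are illustrative names made up for this sketch, not part of any vendor SDK.

```python
from dataclasses import dataclass
from typing import Callable, List

# A "model" is any function that takes a prompt string and returns text.
# These are placeholders: wrap your real API client of choice behind this shape.
Model = Callable[[str], str]

AUDIT_CHECKLIST = ("security", "edge cases", "efficiency")


@dataclass
class ReviewResult:
    draft: str   # output of the generating model
    audit: str   # the other model's critique of that draft


def build_audit_prompt(draft: str, checklist=AUDIT_CHECKLIST) -> str:
    """Ask the auditing model to focus on the checklist items from the tip."""
    focus = ", ".join(checklist)
    return (
        f"Review the following code for {focus}. "
        "List concrete problems and suggested fixes.\n\n" + draft
    )


def cross_review(task: str, generator: Model, auditor: Model) -> ReviewResult:
    """One model drafts the solution, the other audits the draft."""
    draft = generator(task)
    audit = auditor(build_audit_prompt(draft))
    return ReviewResult(draft=draft, audit=audit)


def swap_roles(task: str, model_a: Model, model_b: Model) -> List[ReviewResult]:
    """Run the loop both ways so each model's draft is audited by the other."""
    return [cross_review(task, model_a, model_b),
            cross_review(task, model_b, model_a)]
```

Running it both ways with `swap_roles` means each model’s output gets checked by the other, which may surface issues a single model would gloss over in its own work.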

This battle between giants is ultimately a win for us users, as it keeps prices competitive and innovation rapid. For the complete visual breakdown of the surfboard website test, check out the full video.