- Cyber Corsairs 🏴☠️ AI Productivity Newsletter

🏴☠️ ChatGPT Isn’t Really Smart

3 Reasons It Hallucinates

ChatGPT isn’t actually “smart” in the way you think it is. It is essentially a glorified auto-complete engine running on supercomputers, yet it manages to mimic human reasoning terrifyingly well.

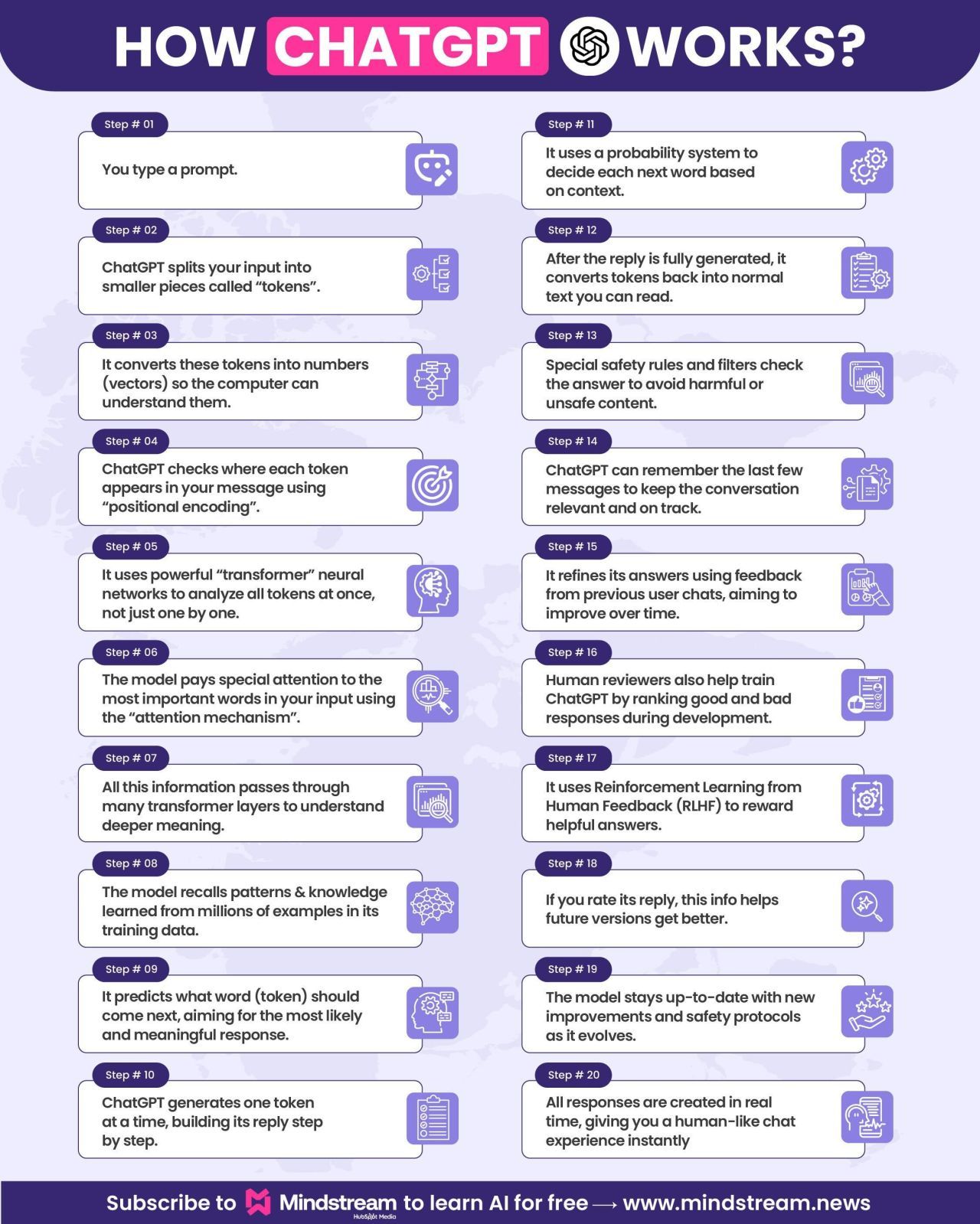

It feels like magic when it replies in seconds, but under the hood, it is a complex dance of statistics and vectors. I found a brilliant breakdown by a LinkedIn creator that peels back the layers of this technology to show what happens between your input and the AI’s output.

The Mechanics of “Thinking”

The expert explains that the process is a ten-step journey from text to math and back again. The model doesn’t read your sentence left-to-right like a human would. Instead, it chops your input into “tokens” (chunks of characters) and converts them into numerical vectors. The core engine, the “Transformer” network, then analyzes all these tokens at once. Because nothing in that parallel pass preserves word order on its own, a step called “positional encoding” tags each token with its position in the sequence. The model isn’t understanding meaning; it’s calculating relationships between numbers in a vast multi-dimensional space.
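To make the positional encoding idea concrete, here is a minimal Python sketch. It assumes the sinusoidal scheme from the original Transformer design; the breakdown doesn’t say which scheme ChatGPT actually uses, and the function names and the tiny 4-number embeddings are made up for illustration.

```python
import math

def positional_encoding(position: int, dim: int) -> list[float]:
    """Sinusoidal position vector: even slots use sin, odd slots use cos."""
    vec = []
    for i in range(dim):
        # Each pair of slots (2k, 2k+1) shares one wavelength.
        angle = position / (10000 ** ((i // 2) * 2 / dim))
        vec.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vec

def add_position(embeddings: list[list[float]]) -> list[list[float]]:
    """Add a position vector to each token embedding, slot by slot."""
    out = []
    for pos, emb in enumerate(embeddings):
        pe = positional_encoding(pos, len(emb))
        out.append([e + p for e, p in zip(emb, pe)])
    return out

# Hypothetical embedding for one token that appears twice in a prompt.
same_token = [0.5, -0.2, 0.1, 0.9]
encoded = add_position([same_token, same_token])

# The two copies now differ, so the model can tell position 0 from position 1.
print(encoded[0] != encoded[1])
```

The takeaway: the same token gets a different vector depending on where it sits, which is how a model that looks at everything at once can still tell word order.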

Why It Works (and Why It Hallucinates)

Here are three specific takeaways based on the author’s analysis:

The Translation Layer: The creator highlights that machines don’t speak English; they speak math. When you type a prompt, the first few steps involve splitting text into tokens and converting them into vectors. This is crucial to understand because it explains why the model sometimes gets tripped up on simple character-based tasks, like counting how many “r”s are in “strawberry.” It is processing numerical relationships representing chunks of text, not reading individual letters. Understanding this helps you realize you are interacting with a calculator, not a writer.
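Here is a toy Python sketch of why letter counting is awkward. The vocabulary and ID numbers below are hypothetical, and the greedy longest-match split is a simplification standing in for the learned tokenizers real models use.

```python
# Hypothetical mini-vocabulary; real tokenizers learn far larger ones.
VOCAB = {
    "str": 101, "aw": 102, "berry": 103,
    "s": 1, "t": 2, "r": 3, "a": 4, "w": 5, "b": 6, "e": 7, "y": 8,
}

def tokenize(text: str) -> list[str]:
    """Greedy longest-match split of text into chunks found in VOCAB."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest possible chunk first, then shrink.
        for end in range(len(text), i, -1):
            if text[i:end] in VOCAB:
                tokens.append(text[i:end])
                i = end
                break
        else:
            raise ValueError(f"no token covers {text[i]!r}")
    return tokens

tokens = tokenize("strawberry")
ids = [VOCAB[t] for t in tokens]

print(tokens)  # ['str', 'aw', 'berry']
print(ids)     # [101, 102, 103]
```

In this sketch the model would receive three ID numbers, not ten letters. Nothing in `[101, 102, 103]` says how many “r”s are hiding inside.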

The Attention Spotlight: This is the “secret sauce” the original poster emphasizes in steps 5 and 6. The model uses an “Attention Mechanism” to figure out context. If you use the word “bat,” is it a flying mammal or a piece of baseball equipment? The model looks at every other token in your input simultaneously to assign weight and importance. This is why your prompts need to be specific. You need to provide enough context to guide that spotlight effectively, so the model focuses on the right parts of your prompt before it starts generating.
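The “bat” example can be sketched in a few lines of Python. This is a stripped-down take on scaled dot-product attention, not the real thing: the 2-number vectors are invented, and actual models use learned projections and much larger vectors.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, vectors):
    """Weight each context vector by its match with the query, then blend."""
    scale = math.sqrt(len(query))
    scores = [sum(q * v for q, v in zip(query, vec)) / scale for vec in vectors]
    weights = softmax(scores)
    blended = [sum(w * vec[d] for w, vec in zip(weights, vectors))
               for d in range(len(query))]
    return weights, blended

# Made-up vectors: slot 0 is "animal-ness", slot 1 is "sports-ness".
bat = [1.0, 1.0]  # ambiguous on its own
sports_context = {"swung": [0.2, 1.0], "the": [0.1, 0.1], "baseball": [0.0, 2.0]}
animal_context = {"the": [0.1, 0.1], "cave": [1.5, 0.0], "flew": [2.0, 0.0]}

sports_weights, sports_blend = attend(bat, list(sports_context.values()))
animal_weights, animal_blend = attend(bat, list(animal_context.values()))

# Which neighbor does "bat" lean on most in the sports sentence?
top_sports_word = max(zip(sports_weights, sports_context))[1]
print(top_sports_word)  # baseball
```

The same ambiguous “bat” vector ends up blended toward sports in one context and toward animals in the other. The surrounding words do the disambiguating, which is the case for giving the model specific context.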

The Probability Engine: The final steps reveal that the model is simply predicting the next most likely token based on patterns learned during training. It is not looking anything up in a database of verified facts; it is scoring every possible next token and picking from that probability distribution. The expert points out that it builds the reply one step at a time, aiming for the “most likely” response. This explains hallucinations. If the statistically probable next word is factually wrong, the model will still choose it when the linguistic pattern fits better than the truth.
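A quick Python sketch shows how a likely-sounding wrong answer can win. The scores below are invented for illustration and are not taken from any real model. The sketch always picks the top token, which is a simplification.

```python
import math

def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical scores for the next token after the prompt
# "The capital of Australia is". The numbers are made up.
logits = {"Sydney": 3.2, "Canberra": 2.9, "Melbourne": 1.5}

probs = dict(zip(logits, softmax(list(logits.values()))))

# Greedy choice: take whichever token has the highest probability.
next_token = max(probs, key=probs.get)
print(next_token)  # Sydney
```

In this made-up case “Sydney” edges out “Canberra” because it scores slightly higher, not because anything checked the facts. Nothing in the selection step knows which answer is true.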

The Nuance

The most important thing to remember from this breakdown is that the AI doesn’t “know” anything. The author describes it as predicting, not understanding. While it’s tempting to treat the bot like an all-knowing oracle, you are actually interacting with a prediction model that is trying to complete a pattern you started.